The two overlaid videos will stick to their corners. You can use a format like 1920x1080 instead of hd1080. The main stream is the background, so just change the size of that by editing size=hd1080 or scale=s=hd1080 in the stream definition. The filtergraph doesn't need any change to adapt to different aspect ratios, but here are some things you might want to adapt. However, be advised that it'll be about twice slower as this way it will scale the background again for each and every frame. If you are stuck with a release without loop filter, here's a workaround: use the loop demuxing option: -loop 1 -i background.jpg and remove the loop filter like scale=s=hd1080. the filter is newer than the 3.0 branch.Ģ3:59:08 #ffmpeg general users are recommended to use a build from git master instead of releases which are mainly for distributors Apparently that's what "general users" are supposed to do anyway: 23:58:35 #ffmpeg FiloSottile: your ffmpeg is too old.

FFMPEG SCALE MAP VIDEO HELP INSTALL

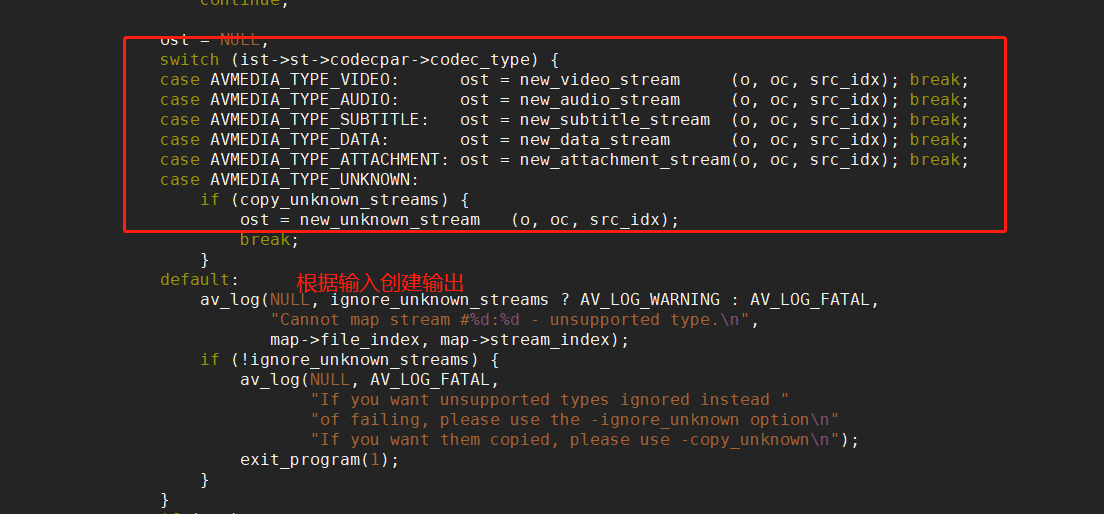

Note that you need to compile FFmpeg from master ( brew install -HEAD ffmpeg) for that to work, or you will see a No such filter: 'loop' error. Here's how you can use a picture instead of a black background: ffmpeg -i slides.mp4 -i speaker.mp4 -i background.jpg -filter_complex " Again, argument order is all: video.mp4 will contain the streams specified by the -map that precede it, so for video and 1:a for audio. shortest=1 makes the output terminate as soon as any input terminates. The first overlay places the video at the bottom right corner using parameters ( x=main_w-overlay_w:y=main_h-overlay_h) and the second at the top left. overlay slaps the second input stream on top of the first at the specified position. We fix that by passing the stream through a filter that sets each timestamp to "timestamp minus timestamp of the first frame" ( PTS-STARTPTS).įinally we use the overlay filter twice. This is often the case when you previously cut the video, and since overlay respects that, one stream would start after the other. Then we take the video streams and, sync them and scale them to the final size we want them while keeping the proportions ( h=-1), generating the and streams.Ī note about that setpts filter: streams can have timestamps that say for example that the first frame of the video is meant to show at second 5. In this graph we first generate a stream of the right color and size to work on with a color source. Each line, separated by, takes zero or more input streams, one or more source/filter, and defines one (or more) output streams. The -filter_complex argument is the graph. The ordering is important, as we will refer to slides.mp4 as and speaker.mp4 as.

i slides.mp4 -i speaker.mp4 are the inputs. There are three parts to the command: inputs, graph and outputs. overlay=shortest=1:x=main_w-overlay_w:y=main_h-overlay_h Here's a first iteration with a black background: ffmpeg -i slides.mp4 -i speaker.mp4 -filter_complex " The desired output is both streams scaled and placed on top of the background at the opposite corners, with audio from one of them, DEFCON style. a camera video of the speaker waving their hands.Colleagues who detect that won't fail to take advantage, so I ended up tasked with crafting an unholy command line to mix the CloudFlare London tech talk videos. In a past life I wrote FFmpeg filters, which has the interesting side effect of making you think of the FFmpeg filtergraph as sane. If they annoy, tell me and I'll get a wiki or something. Usual disclaimer: "technical notes" posts are probably of zero interest to the blog followers and are just meant for Google. Technical notes: mixing speaker and slides recording with FFmpeg
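
Only part of the first-iteration command above survives. Below is a hedged, untested sketch of a complete black-background graph, written as a small POSIX shell script. It uses the pieces the text describes: a `color` source, `setpts=PTS-STARTPTS`, `scale` with `h=-1`, and two `overlay`s with `shortest=1`. Several details are assumptions and not the original values: the 1440 and 480 pixel widths, the placement of the slides at the bottom right and the speaker at the top left, and the labels `[bg]`, `[slides]`, `[speaker]`, `[tmp]` and `[out]`.

```shell
#!/bin/sh
# Untested sketch of the black-background graph. Assumptions (not from the
# original command): slides scaled to 1440 px wide at the bottom right,
# speaker scaled to 480 px wide at the top left, and the labels below.
graph='color=size=hd1080:color=black [bg];
[0:v] setpts=PTS-STARTPTS, scale=w=1440:h=-1 [slides];
[1:v] setpts=PTS-STARTPTS, scale=w=480:h=-1 [speaker];
[bg][slides] overlay=shortest=1:x=main_w-overlay_w:y=main_h-overlay_h [tmp];
[tmp][speaker] overlay=shortest=1 [out]'
# The second overlay uses the default position x=0:y=0, the top left corner.

# Only call ffmpeg when both inputs exist; otherwise just define $graph.
if [ -f slides.mp4 ] && [ -f speaker.mp4 ]; then
  # The -map options must come before the output file they apply to:
  # video from the graph's [out] label, audio from speaker.mp4 (1:a).
  ffmpeg -i slides.mp4 -i speaker.mp4 -filter_complex "$graph" \
    -map '[out]' -map 1:a video.mp4
fi
```

With 16:9 inputs, the two scaled videos come out at 1440x810 and 480x270, so they don't overlap on the 1920x1080 (`hd1080`) background. If the input files are missing, the script only defines `$graph`, which can be inspected with `printf '%s\n' "$graph"`.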

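Here is a similar untested sketch for the picture background. It assumes background.jpg is passed as the third input, so its video stream is `2:v`. It also assumes the `loop` filter comes right after the background's `scale`, with `loop=-1` (repeat forever) and `size=1` (a one-frame loop). That placement is a guess, chosen because it matches the speed note about the demuxer workaround: the image is scaled once and the scaled frame is then repeated. The rest of the graph reuses the same assumed widths and labels as a plain black-background version. It needs an FFmpeg build that includes the `loop` filter.

```shell
#!/bin/sh
# Untested sketch of the picture-background graph. background.jpg is input 2,
# so its video stream is [2:v]. Placing loop after scale (an assumption) means
# the image is resized once and that single scaled frame is repeated forever.
graph='[2:v] scale=s=hd1080, loop=loop=-1:size=1:start=0 [bg];
[0:v] setpts=PTS-STARTPTS, scale=w=1440:h=-1 [slides];
[1:v] setpts=PTS-STARTPTS, scale=w=480:h=-1 [speaker];
[bg][slides] overlay=shortest=1:x=main_w-overlay_w:y=main_h-overlay_h [tmp];
[tmp][speaker] overlay=shortest=1 [out]'

# The endless background is cut off by shortest=1 when slides or speaker ends.
if [ -f slides.mp4 ] && [ -f speaker.mp4 ] && [ -f background.jpg ]; then
  ffmpeg -i slides.mp4 -i speaker.mp4 -i background.jpg \
    -filter_complex "$graph" -map '[out]' -map 1:a video.mp4
fi
```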

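The demuxer workaround for builds without the `loop` filter can be sketched the same way. `-loop 1` is an input option, so it sits right before `-i background.jpg`, and the background chain is only `scale`. `BG_SIZE` is a hypothetical environment variable added here to show the size tweak. It takes a name like `hd1080` or an explicit size like `1920x1080`. The widths and labels are the same assumptions as in a plain black-background version.

```shell
#!/bin/sh
# Untested sketch of the -loop 1 workaround. BG_SIZE is a hypothetical knob
# for the background size (e.g. hd1080 or 1920x1080); it defaults to hd1080.
BG_SIZE="${BG_SIZE:-hd1080}"

# No loop filter: the demuxer repeats the image, so it is decoded and scaled
# again for every output frame.
graph="[2:v] scale=s=$BG_SIZE [bg];
[0:v] setpts=PTS-STARTPTS, scale=w=1440:h=-1 [slides];
[1:v] setpts=PTS-STARTPTS, scale=w=480:h=-1 [speaker];
[bg][slides] overlay=shortest=1:x=main_w-overlay_w:y=main_h-overlay_h [tmp];
[tmp][speaker] overlay=shortest=1 [out]"

if [ -f slides.mp4 ] && [ -f speaker.mp4 ] && [ -f background.jpg ]; then
  # -loop 1 applies to the input that follows it, background.jpg.
  ffmpeg -i slides.mp4 -i speaker.mp4 -loop 1 -i background.jpg \
    -filter_complex "$graph" -map '[out]' -map 1:a video.mp4
fi
```

For example, if the script is saved under the hypothetical name mix.sh, `BG_SIZE=1920x1080 sh mix.sh` is equivalent to the default. The overlay widths stay fixed, so on a different background size the two videos keep to their corners but take up a different share of the frame.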